There are multiple approaches to getting your application to the cloud, but many companies are going “all in” on a cloud-native microservices approach because it makes applications easier to deploy, scale, enhance, and maintain. In this article, we walk through the architecture design decisions behind our anonymous email tool, DragonMail, to demonstrate some options and capabilities to consider when moving from a monolithic architecture to a microservice architecture using the dotNet capabilities in AWS.

Strategic Planning

At the start, we looked for “quick wins” that we could implement right away and developed a longer-term strategy for breaking up our established monolithic architecture. One of our strategic goals was to build upon the expertise of our delivery team and current technology stack investment.

Deployment Considerations

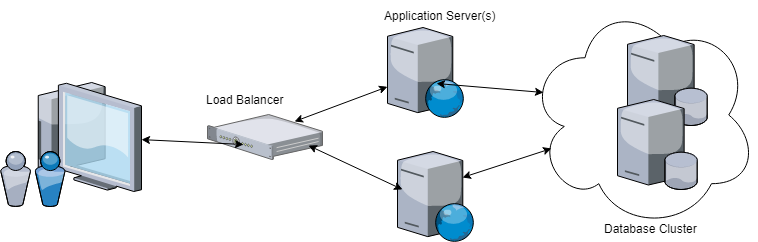

To start, here is a typical deployment of dotNet applications in the enterprise:

- Hosted on either a dedicated VM or a shared environment (Side note: the resources available to the application are usually either overkill if provisioning is easy or strapped if provisioning is hard.)

- Load balanced, so there are at least 2 servers (plus a load balancer)

- Connected to some data store, most likely a relational database

- Monolithic application(s) deployed within IIS and/or Windows Service

- A (mostly) matching environment for UAT and a representative environment for QA and/or Development.

A rollout of the typical architecture often requires significant operations investment and still introduces single points of failure throughout the application, so we chose to utilize AWS microservices to scale our DragonMail dotNet application.

Developing the Architecture

AWS offers many paths for microservices, so we had to decide how to frame our architecture design. We looked for:

- Low cost/free hosting options

- Capabilities of AWS that best enable dotNet customers to utilize a microservice architecture

- AWS managed services over having to build and support our own custom infrastructure management

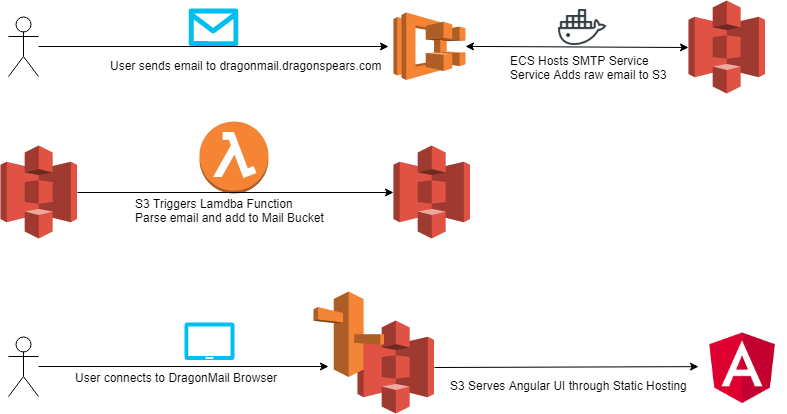

With those assumptions in mind, here is how we approached building the architecture for DragonMail. The main components were the SMTP Server, Mail Parser/Storage, and Anonymous Mail Reader.

1. SMTP Server

The SMTP Service was our first microservice and our first challenge. Ideally, we wanted to use the AWS API Gateway because it provides a scalable solution for HTTP services. However, SMTP is not an HTTP-based protocol; it runs directly over TCP, so the API Gateway wasn’t able to host this service.

We decided to go with another option in microservices: containers. Using the AWS managed container service, ECS, we created a dotNet Core container image to run our SMTP Service on Linux. Hosting on Linux reduced our ECS cost, and the managed container deployment mechanism reduced our operations cost.

ECS can pull images from the Docker registry (or from Amazon ECR, the private registry in AWS), so we now have a built-in deployment pipeline set up for our local development and testing with the Windows/Visual Studio 2017 toolset. As a result, our team can rely on familiar technologies and development paradigms to ramp up quickly.

2. Mail Parser/Data

Now that we had a microservice that could accept email, we needed something to parse it. A monolithic approach would have the SMTP service parse the email and store it in a tightly coupled data store. The microservices approach narrowed that service's responsibility to two things: handling the SMTP handshake and storing the raw email in an S3 bucket.
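To make that narrowed responsibility concrete, here is a minimal sketch of the SMTP dialogue, written in Python for brevity rather than the dotNet Core used in DragonMail. It is an illustration under stated assumptions, not our production code: the `SmtpSession` class, the `store` callback (a stand-in for an S3 upload), and the `dragonmail.example` host name are all hypothetical, and a real server would also need the TCP listener, address validation, and size limits.

```python
from typing import Callable, List, Optional


class SmtpSession:
    """Handles one client's SMTP dialogue, one line at a time."""

    def __init__(self, store: Callable[[bytes], None]) -> None:
        self.store = store            # hypothetical hook, e.g. an S3 upload
        self.in_data = False          # True while receiving the message body
        self.lines: List[bytes] = []  # message lines collected after DATA

    def greeting(self) -> bytes:
        # Sent by the server as soon as the TCP connection opens.
        return b"220 dragonmail.example ESMTP ready\r\n"

    def handle_line(self, line: bytes) -> Optional[bytes]:
        """Return the reply for one CRLF-terminated line, or None if no reply is due."""
        text = line.rstrip(b"\r\n")
        if self.in_data:
            if text == b".":  # a lone dot ends the message
                self.store(b"\r\n".join(self.lines) + b"\r\n")
                self.in_data, self.lines = False, []
                return b"250 OK message accepted\r\n"
            # Undo SMTP dot-stuffing: drop the extra leading dot.
            self.lines.append(text[1:] if text.startswith(b".") else text)
            return None
        verb = text.split(b" ", 1)[0].upper()
        if verb in (b"HELO", b"EHLO"):
            return b"250 dragonmail.example\r\n"
        if verb in (b"MAIL", b"RCPT"):
            return b"250 OK\r\n"  # this sketch accepts every sender and recipient
        if verb == b"DATA":
            self.in_data = True
            return b"354 End data with <CR><LF>.<CR><LF>\r\n"
        if verb == b"QUIT":
            return b"221 Bye\r\n"
        return b"502 Command not implemented\r\n"
```

The session never parses the message. It hands the raw bytes to `store` and moves on, which is what keeps this service small and leaves parsing to a separate component.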

We configured this bucket to trigger a custom Lambda function, written in dotNet Core, that is responsible for parsing these emails. Once parsed, it writes them to another S3 bucket for downstream services to process. By splitting up each responsibility, we eliminated some of the system dependencies and created easily testable, repeatable inputs and outputs for each microservice.
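The shape of such a parsing function can be sketched as follows. This is an illustrative Python version, not the dotNet Core function we deployed. The S3 event layout (`Records[].s3.bucket.name` and `Records[].s3.object.key`, with URL-encoded keys) is the standard notification format; everything else is an assumption made for the example: the `read_object` and `write_object` callbacks stand in for S3 get and put calls, and the `dragonmail-parsed` bucket name and the output JSON fields are hypothetical.

```python
import json
from email import message_from_bytes, policy
from urllib.parse import unquote_plus


def parse_raw_email(raw: bytes) -> dict:
    """Turn a raw RFC 5322 message into a small JSON-friendly dict."""
    msg = message_from_bytes(raw, policy=policy.default)
    body = msg.get_body(preferencelist=("plain", "html"))
    return {
        "from": str(msg["From"] or ""),
        "to": str(msg["To"] or ""),
        "subject": str(msg["Subject"] or ""),
        "body": body.get_content() if body is not None else "",
    }


def handle_s3_event(event, read_object, write_object,
                    parsed_bucket="dragonmail-parsed"):
    """Parse every object named in an S3 event and write the result as JSON.

    read_object(bucket, key) -> bytes and write_object(bucket, key, data)
    are hypothetical stand-ins for the S3 client calls.
    """
    written = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = unquote_plus(record["s3"]["object"]["key"])
        parsed = parse_raw_email(read_object(bucket, key))
        out_key = key + ".json"
        write_object(parsed_bucket, out_key, json.dumps(parsed).encode("utf-8"))
        written.append(out_key)
    return written
```

Because storage is passed in as plain callables, the function can be exercised locally with an in-memory dictionary: a given raw message always produces the same parsed document, which is the testable, repeatable behavior described above.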

You may have noticed that we didn’t utilize a database, but instead chose to rely on S3 for those operations. This approach gives us the flexibility to add another microservice triggered on the parsed-email S3 bucket, so we can analyze the email further as business needs require, for example to provide more advanced reporting. Now we can chain different services with different responsibilities, each testable with repeatable inputs and outputs.
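As a sketch of what one such chained service might look like, the hypothetical reporting function below counts parsed emails by sender domain. It is written in Python for illustration and assumes, purely for the example, that each object in the parsed bucket is a JSON document with a `from` field; neither the function nor that schema comes from the DragonMail code.

```python
import json
from collections import Counter
from typing import Iterable


def sender_domain_report(parsed_documents: Iterable[bytes]) -> dict:
    """Count parsed emails per sender domain.

    Each item is assumed to be a JSON document with a "from" field
    (a hypothetical schema for the parsed-email bucket).
    """
    counts = Counter()
    for doc in parsed_documents:
        sender = json.loads(doc).get("from", "")
        if "@" in sender:
            # Handles both "user@host" and "Name <user@host>" forms.
            domain = sender.rsplit("@", 1)[-1].strip("<> ").lower()
        else:
            domain = "unknown"
        counts[domain] += 1
    return dict(counts)
```

The service depends only on the format of the parsed documents, not on the SMTP service or the parser, so it can be added, changed, or removed without touching either of them.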

3. Anonymous Mail Reader

Finally, we needed to read these emails. If this were a typical dotNet application, we would probably have an ASP.NET website hosting the infrastructure for all the components identified so far. We decided to favor a browser-based UI, so we could go completely serverless and use static hosting on S3 to host an Angular UI.

This lightweight hosting reduced our UI hosting and operations cost to almost nothing (since it's all client-side) while giving us the scale of S3 and CloudFront. As requirements change and more complex behavior is needed, the UI can stay on static hosting and connect to other services in our microservice infrastructure.

In Summary

The microservices approach allowed us to break apart our original monolithic application into smaller, testable components. Based on the design decisions we’ve discussed, our architecture ended up looking like this:

With this architecture, we’ve reduced infrastructure and operations costs by utilizing ECS with dotNet Linux containers, S3, and Lambda. Even with these drastic infrastructure changes, we’ve kept our investment in our development methodology consistent for the delivery team and given them an opportunity to grow their capabilities.

Most importantly for our customers, we’ve broken up our monolithic application so that new components and functionality no longer require taking the entire system offline. Instead, these features can be rolled out with the increased scalability, redundancy, and agility that a cloud-native microservice architecture provides.